Live FX - The Industry Standard for Image-based Lighting

Live FX can drive DMX fixtures as well as video fixtures and perform easy green screen previz tasks using our proven color and compositing engine.

Please see our feature matrix for feature-based differences between Live FX and Live FX Studio.

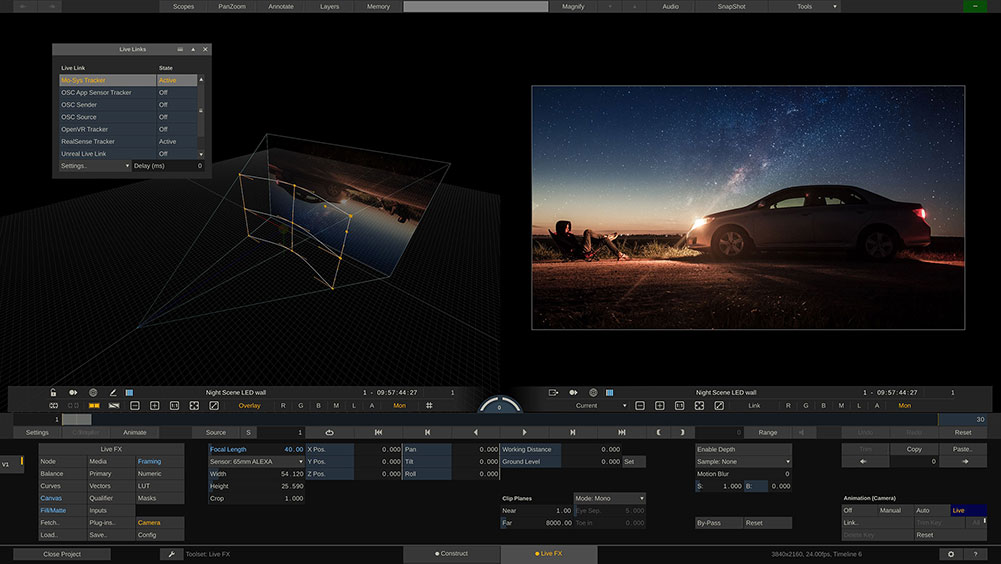

Live FX Studio - The Cinematographer's Media Server

Our solution for complete LED volume projection, Image-based Lighting and high-end green screen live compositing. Live FX Studio comes with all the color tools from post, including an advanced transcoding and mastering engine to prep footage for any shoot in no time.

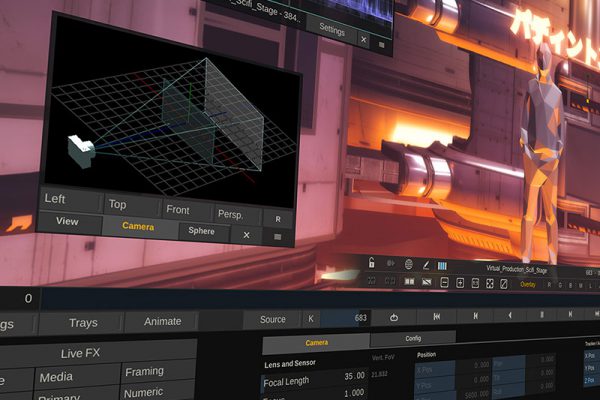

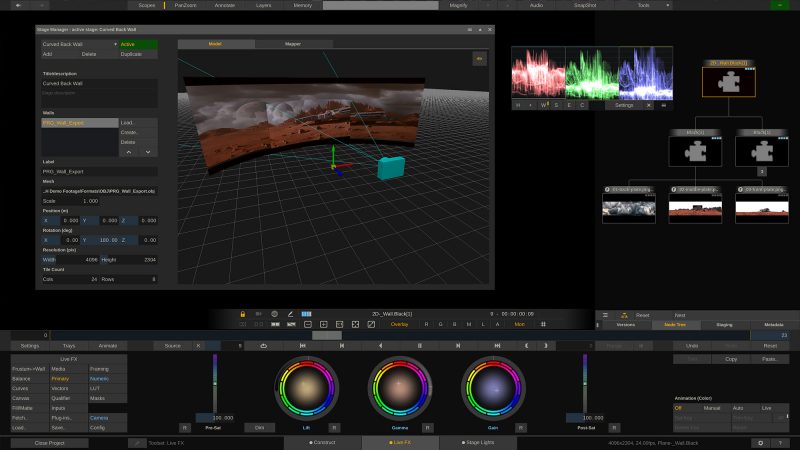

LED wall based Virtual Production

Live FX is a media server in a box. It can play back 2D and 2.5D content at any resolution or frame rate to an LED wall of any size from a single machine.

It supports camera tracking, projection mapping, image-based lighting and much more.

Native support for Notch Blocks and the USD file format allows Live FX to be used with 3D environments as well, but it doesn’t stop there! Through a Live Link connection and GPU texture sharing, Live FX can also be combined with Unreal Engine and Unity.

Live FX’s extensive grading and compositing features enable users to quickly implement DoP feedback in real time and even allow for set extensions beyond the physical LED wall.

Image-based Lighting

Not only does the content of the LED wall have to be controlled; the lighting inside the studio also has to play along with the projected content to create the perfect illusion. Thanks to the latest developments in lighting technology, modern fixtures can create fine-grained and detailed lighting environments. Live FX can feed any number of lights by pixel-mapping each fixture to the underlying image content and control all fixtures via the Art-Net or sACN protocol. It is quick to set up and easy to operate, even on laptops, running hundreds of universes simultaneously!
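In its simplest form, pixel mapping reduces the image area assigned to a fixture to a single color and sends it out as DMX levels. The following is a minimal sketch under stated assumptions: a plain box average over the sample region and 8-bit channels. The function names are hypothetical, and this is not Live FX's internal sampling method.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def sample_region(image: Sequence[Sequence[RGB]], x0: int, y0: int, x1: int, y1: int) -> RGB:
    """Average the normalized RGB values inside the box [x0, x1) x [y0, y1)."""
    total = [0.0, 0.0, 0.0]
    count = 0
    for row in image[y0:y1]:
        for pixel in row[x0:x1]:
            for i in range(3):
                total[i] += pixel[i]
            count += 1
    if count == 0:
        raise ValueError("sample region contains no pixels")
    return (total[0] / count, total[1] / count, total[2] / count)


def to_dmx(rgb: RGB) -> Tuple[int, int, int]:
    """Clamp normalized [0, 1] values and quantize them to 8-bit DMX levels."""
    r, g, b = (max(0, min(255, round(c * 255))) for c in rgb)
    return (r, g, b)


# A 2x2 frame: red on the left, blue on the right.
frame: List[List[RGB]] = [
    [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
    [(1.0, 0.0, 0.0), (0.0, 0.0, 1.0)],
]
left_fixture = to_dmx(sample_region(frame, 0, 0, 1, 2))  # samples the red column only
```

Each fixture, or each segment of a multi-pixel fixture, gets its own box, and the resulting levels are written into that fixture's channels of a universe.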

Green Screen based Virtual Production

Live FX ships with all the tools needed for green screen keying, grading, camera and object tracking, as well as for creating advanced composites that merge the live camera signal with CG elements, be it simple 2D textures, equirectangular footage, OFX plugins, Matchbox shaders or complete 3D environments from Notch, Unreal Engine or Unity.

On top of that, Live FX can record the live composite as individual assets and automatically turn the recorded material into a fully customizable offline composite that is ready to play back as soon as the camera stops recording.

Virtual Production off the Stage

Live FX is not limited to green screen and LED wall stages. Thanks to its modest hardware requirements, it can also be brought on location for live composites in mission-critical shoots, or for quick previz during location scouting. Live FX allows you to create the perfect illusion on any set and prep every bit of metadata for VFX post. It is truly the most versatile piece of virtual production software on the planet. Check out various workflow models below!

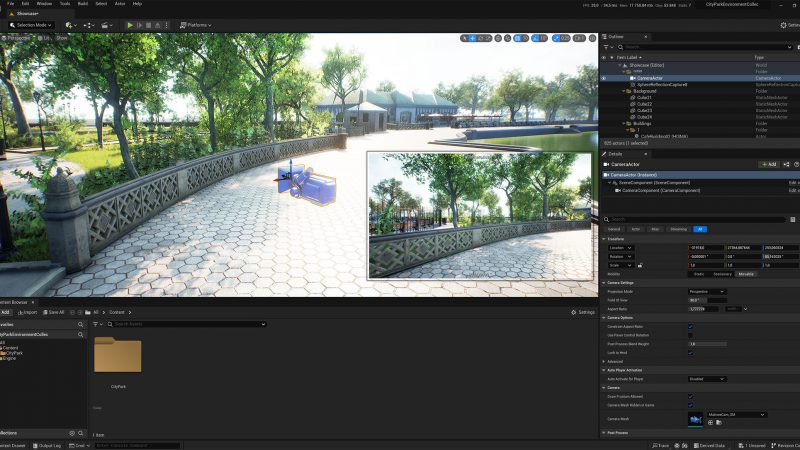

Creating real time in-camera VFX

Assimilate Live FX can load any footage at any resolution and any frame rate and play it out to an LED wall. This includes 2D elements, such as a simple PNG file, a QuickTime file or any suitable camera RAW material, as well as 360° material, for which Live FX can even animate the field of view based on camera tracking data. But it doesn't stop there: Live FX can load complete 3D environments in the form of Notch Blocks and USD scenes and tie the virtual camera to the physical camera on set!

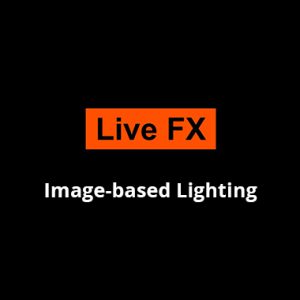

Projection Mapping

Configure your stage directly inside Live FX, or import OBJ mesh files of individual LED walls. Live FX uses the 3D setup of the stage and camera tracking data to calculate the correct perspective for a realistic and immersive projection on all screens, no matter if the source is 2D, 2.5D, 3D, Unreal Engine or any form of live signal, and no matter if it is HD, UHD, 8K or beyond 16K, backed by the widest format support in the industry!

Did someone say DMX control?

Not only does the content of the LED wall have to be controlled; the lighting inside the studio also has to play along with the projected content to create the perfect illusion. Live FX supports a multitude of light panels through DMX via the Art-Net and sACN protocols and can control them based on image content. The LED wall shows a flickering fire? No problem: Live FX will let your stage lights flicker along at the same frequency and hue. The car chase scene enters a tunnel and the lights need to go dark at the same time? Not a problem either.

Set Extensions

Once the stage is set up in the Stage Manager, Live FX automatically creates an alpha map of the stage. This allows users to easily apply a set extension by capturing the live camera signal through a switcher node and applying a virtual background using the stage matte generated by Live FX. Live FX can use any kind of 2D and 2.5D footage, as well as complete 3D environments from Unreal Engine, Unity and Notch, for set extensions. Its extensive grading tools allow users to grade the virtual set extension and the live camera signal capturing the volume separately in order to blend them seamlessly.
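Conceptually, the stage matte acts as a per-pixel mix between the camera feed and the virtual background. The sketch below assumes a matte value of 1.0 where the camera sees the LED volume and 0.0 outside it, with soft values in between; the function name and the flat pixel lists are illustrative only and do not reflect how Live FX stores images.

```python
from typing import List, Sequence, Tuple

RGB = Tuple[float, float, float]


def extend_set(camera: Sequence[RGB], virtual: Sequence[RGB],
               stage_matte: Sequence[float]) -> List[RGB]:
    """Keep the camera feed inside the stage matte and fill the rest with the virtual set.

    out = matte * camera + (1 - matte) * virtual, evaluated per pixel and channel.
    """
    if not (len(camera) == len(virtual) == len(stage_matte)):
        raise ValueError("all inputs need the same number of pixels")
    out: List[RGB] = []
    for cam, virt, a in zip(camera, virtual, stage_matte):
        a = max(0.0, min(1.0, a))  # clamp the matte to [0, 1]
        r, g, b = (a * c + (1.0 - a) * v for c, v in zip(cam, virt))
        out.append((r, g, b))
    return out


# Three pixels: on the LED wall, off the wall, and on a soft matte edge.
result = extend_set(
    camera=[(1.0, 1.0, 1.0)] * 3,
    virtual=[(0.0, 0.5, 1.0)] * 3,
    stage_matte=[1.0, 0.0, 0.5],
)
```

Grading the two inputs separately before this mix is what lets them blend seamlessly.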

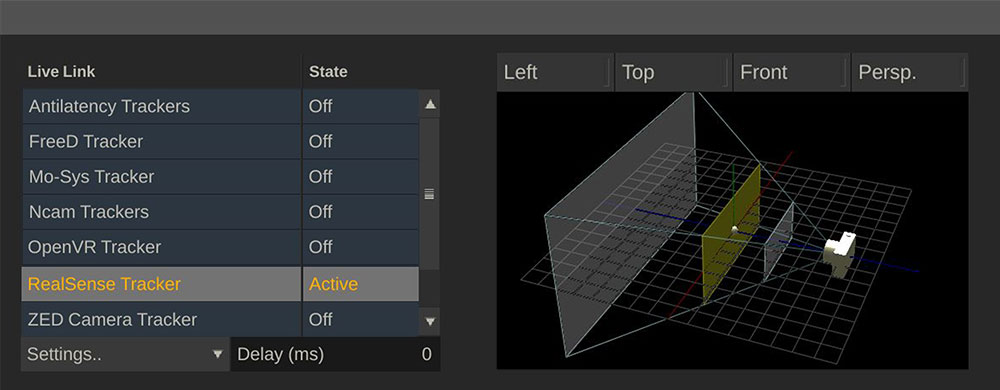

Camera Tracking for everyone

With LED wall based workflows, camera tracking becomes paramount. Live FX supports a number of methods to track your camera, depending on budget and technology. Whether you're using Mo-Sys StarTracker, NCAM, StereoLabs, HTC Vive trackers, Intel RealSense or simply your smartphone with its internal gyro sensor or an ARKit app, Live FX is ready for any budget and on-set situation. Even without a dedicated tracking device, Live FX can perform accurate camera tracking by reading out the pan, tilt and roll information carried in the camera's live SDI signal.

Previz and beyond

The main job in a virtual production setup is to merge live imagery with CGI elements. Live FX ships with all the tools that professional artists are familiar with to create stunning composites. Besides color tools such as Color Wheels, Curves, Vector Grid and the ability to load lookup tables, Live FX features powerful keyers and allows users to combine different keys through layers and a node tree. Unparalleled format support allows you to import virtually any kind of footage, with and without alpha channels, in any resolution, any frame rate and even in 360° equirectangular. The hardware underneath is the only limit to what Live FX can do!

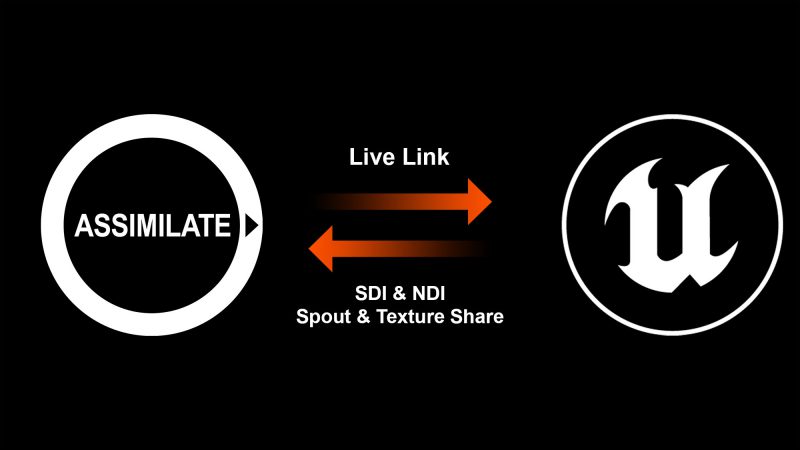

Live FX is not an island!

While Live FX has been designed as a one-stop application, it provides a number of hooks for integration into existing virtual production workflows. Live FX can be fully controlled through Open Sound Control (OSC) and can also send metadata, such as playback controls and camera positional data, via OSC to other apps. Through a dedicated Live Link, Live FX integrates directly with Unreal Engine and Unity, and if the apps run on the same machine, Live FX allows for direct texture sharing on the GPU for virtually zero-latency image exchange!
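OSC messages are small binary packets, usually sent over UDP. The sketch below encodes a message following the OSC 1.0 specification: null-terminated strings padded to 4-byte boundaries, a type tag string starting with a comma, and big-endian arguments. The address `/hypothetical/playback/play` is a placeholder, not Live FX's actual OSC address space; check the Live FX user guide for the real commands.

```python
import struct
from typing import Union

OscArg = Union[int, float, str]


def _osc_string(text: str) -> bytes:
    """Null-terminate and pad to a multiple of 4 bytes, as OSC 1.0 requires."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, *args: OscArg) -> bytes:
    """Encode an OSC message with int32 ('i'), float32 ('f') and string ('s') arguments."""
    tags = ","
    payload = b""
    for arg in args:
        if isinstance(arg, bool):
            raise TypeError("booleans are not handled in this sketch")
        if isinstance(arg, int):
            tags += "i"
            payload += struct.pack(">i", arg)
        elif isinstance(arg, float):
            tags += "f"
            payload += struct.pack(">f", arg)
        elif isinstance(arg, str):
            tags += "s"
            payload += _osc_string(arg)
        else:
            raise TypeError(f"unsupported OSC argument: {arg!r}")
    return _osc_string(address) + _osc_string(tags) + payload


# Hypothetical address; replace it with a command documented for your Live FX version.
message = osc_message("/hypothetical/playback/play", 1)
```

To send it, a plain UDP socket is enough, e.g. `socket.socket(socket.AF_INET, socket.SOCK_DGRAM).sendto(message, (host, port))`, with host and port set to whatever the receiving application listens on.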

Livegrading the Volume

Whether you're using Live FX to send content to the LED wall or just piping through content from another source like Unreal Engine, Live FX ships with a complete finishing toolset that lets you livegrade not only the volume itself but also the live camera signal, in order to merge it perfectly with the digital background. Through its intuitive layer stack, Live FX allows you to add an unlimited number of layers to bring other 2D and 3D elements into the scene with just a couple of clicks.

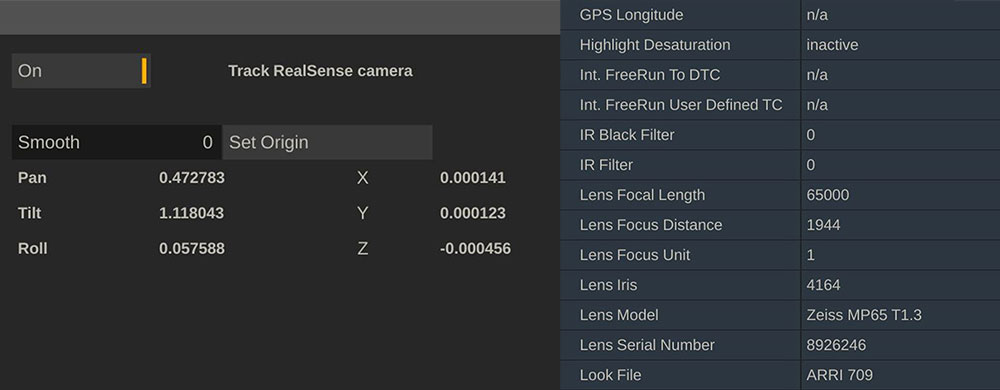

Metadata, metadata and nothing but metadata

Capturing metadata has long been Assimilate's strong suit. With virtual production workflows, capturing metadata is a must, and Live FX makes it as easy as can be. Whether it's live SDI metadata from the camera itself, tracking data from Intel RealSense or user input in the form of on-screen annotations or Scene & Take info, any and all metadata is captured along the way, ready to use in post!

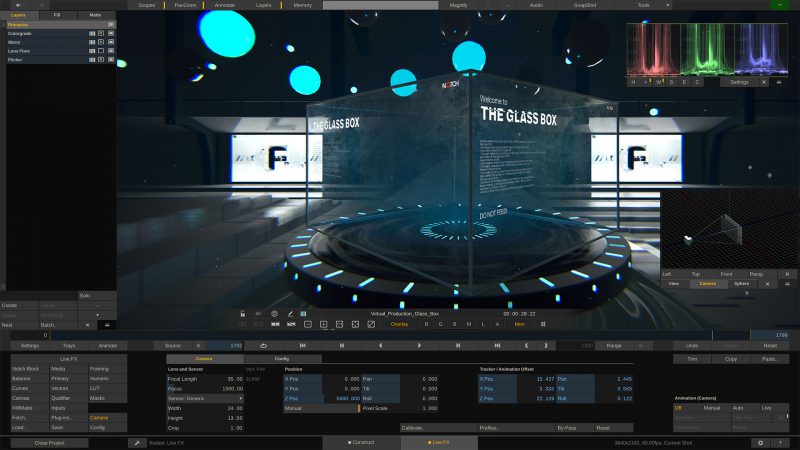

Notch Blocks & USD - next gen 3D environments

With Notch Builder, you can create amazing motion graphics and interactive VFX in real time. These 3D environments can be exported as a Notch Block and imported as a virtual background into Live FX Studio*. In addition to Notch Blocks, Live FX Studio supports 3D environments in the USD (Universal Scene Description) format, which opens the door to plenty of photogrammetry-based scenes and backgrounds. Inside the Live FX Studio project, you can link the camera tracking information from the physical camera to the virtual camera inside the 3D scene. The output from the virtual camera is then used to replace the green screen, or to feed the LED wall via projection mapping. Live FX Studio can animate more than just the camera position and FOV: any object or property of the scene is adjustable, or can be linked to an incoming live parameter. Notch Blocks can also have any number of inputs into which Live FX Studio can feed textures or plugins in order to create the perfect live composite.

*requires a Notch Playback license, which can be obtained from the Notch website.

The king of plate playback

Building on Assimilate's more than 20 years in post production and transcoding, Live FX supports an extensive range of video formats and codecs, including Apple ProRes, NotchLC and common H264/H265-encoded content.

Live FX can handle any resolution and any format, from 2D plates and 2.5D assets to 180° and 360° content in any shape, as well as 3D environments like Notch Blocks and USD scenes.

On top of that comes Assimilate's award-winning color grading and compositing toolset, which allows creative professionals to tweak any part of the image with the turn of a knob or the click of a button.

Through Live FX's projection mapping engine, the content can be projected onto any LED volume of any size, resolution and shape.

2D Content

Live FX can load 2D image content of any resolution and project it in a number of ways onto the LED volume.

To achieve perfect projection, it uses camera tracking information to compensate for any distortion caused by the shape of the LED volume or by the camera's position and angle relative to it. Users can choose from two projection modes: Frustum and Planar projection.

This gives greater freedom for camera operators even with simple 2D plates.
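At its core, frustum projection asks, for every pixel on the LED wall, where that point appears from the tracked camera's point of view, so the plate can be sampled there. The sketch below shows that lookup with a pinhole camera model. It assumes Y is up, the camera looks along +Z at zero pan, only pan is tracked (no tilt, roll or lens distortion), and the focal length is given in pixels; the function name is hypothetical and this is not Live FX's projection engine.

```python
import math
from typing import Optional, Tuple

Vec3 = Tuple[float, float, float]


def project_point(point: Vec3, cam_pos: Vec3, pan_deg: float, focal_px: float,
                  width: int, height: int) -> Optional[Tuple[float, float]]:
    """Project a world-space point (e.g. an LED pixel) into the tracked camera's image.

    Assumed conventions: Y up, camera looks along +Z at pan = 0, positive pan turns
    the camera towards +X. Returns pixel (u, v) with v growing downwards, or None
    if the point lies behind the camera.
    """
    dx = point[0] - cam_pos[0]
    dy = point[1] - cam_pos[1]
    dz = point[2] - cam_pos[2]
    # Undo the camera's pan: rotate the offset by -pan around the Y axis.
    a = math.radians(pan_deg)
    x = dx * math.cos(a) - dz * math.sin(a)
    z = dx * math.sin(a) + dz * math.cos(a)
    y = dy
    if z <= 0.0:
        return None
    u = width / 2 + focal_px * x / z
    v = height / 2 - focal_px * y / z
    return (u, v)


# An LED pixel 1 m right of center on a wall 5 m in front of the camera.
uv = project_point((1.0, 0.0, 5.0), (0.0, 0.0, 0.0), 0.0, 1000.0, 1920, 1080)
```

Dividing u and v by the image width and height turns the result into texture coordinates into the 2D plate.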

2.5D Workflows

Being a live compositor at heart, Live FX allows users to quickly arrange 2D layers in 3D space and thereby create a photorealistic parallax effect. The content can range from pre-recorded and rotoscoped footage to computer- and AI-generated stills with dynamic elements like fog and snow composited in.
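The parallax follows from simple perspective geometry: when the camera moves sideways, a layer placed close to it shifts further on screen than a distant one. A minimal sketch, assuming a pinhole camera, purely lateral camera motion and layers facing the camera; the layer depths, focal length and function name are hypothetical.

```python
from typing import List, Sequence


def layer_shifts_px(camera_shift_m: float, layer_depths_m: Sequence[float],
                    focal_px: float) -> List[float]:
    """Horizontal screen shift of each layer for a lateral camera move.

    A layer at depth z moves by -focal_px * camera_shift / z pixels: opposite to the
    camera, and less the further away it sits.
    """
    shifts = []
    for z in layer_depths_m:
        if z <= 0.0:
            raise ValueError("layers must sit in front of the camera")
        shifts.append(-focal_px * camera_shift_m / z)
    return shifts


# Fog at 2 m, trees at 10 m, mountains at 500 m; the camera moves 0.5 m to the right.
shifts = layer_shifts_px(0.5, [2.0, 10.0, 500.0], focal_px=1500.0)
```

The difference between these shifts is what reads as depth on camera.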

180/360° Wall projection

Live FX has supported equirectangular content from the start.

Projection of 180° and 360° clips is quickly set up and the perspective dialed in.

VP operators can choose between spherical and dome projection and, in one go, also use the parts of the image that are not on the LED volume for image-based lighting. Live FX supports any kind of VR format, e.g. cubic, cubic packed, equirectangular, mesh and cylindrical.
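For spherical projection of equirectangular footage, each LED pixel can be treated as a viewing direction from the center of the virtual sphere and converted to longitude and latitude. The sketch assumes Y up, +Z at the center of the frame, and u, v in [0, 1] with v = 0 at the top; these conventions are assumptions, other tools differ, and dome projection or the other VR layouts would need their own mappings.

```python
import math
from typing import Tuple


def direction_to_equirect_uv(x: float, y: float, z: float) -> Tuple[float, float]:
    """Map a viewing direction to normalized equirectangular texture coordinates."""
    length = math.sqrt(x * x + y * y + z * z)
    if length == 0.0:
        raise ValueError("direction must be non-zero")
    longitude = math.atan2(x, z)                            # -pi..pi, 0 at the frame center
    latitude = math.asin(max(-1.0, min(1.0, y / length)))  # -pi/2..pi/2, positive is up
    u = 0.5 + longitude / (2.0 * math.pi)
    v = 0.5 - latitude / math.pi
    return (u, v)


# Direction from the sphere center towards an LED pixel to the camera's right.
u, v = direction_to_equirect_uv(1.0, 0.0, 0.0)
```

Multiplying u and v by the frame width and height gives the pixel of the 360° frame to sample.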

3D Environments

While Live FX supports a workflow with Unreal Engine as well as with Unity, it can also load 3D environments directly.

Supported formats (currently on Windows only) are Notch Blocks and USD (Universal Scene Description).

This enables super efficient workflows completely independent from game engines even on modest hardware.

Ultimate Image Control

Drawing on Assimilate's 20 years in color grading and finishing, Live FX offers all the grading and compositing tools we know from post production workflows: Keyers, Masks, Curves, Color Wheels and much more.

Live FX lets you create stunning composites live on set within seconds.

Sky replacements, green screen composites, selective color grading and keying, as well as layering, tracking, keyframe animations and the use of OFX plugins and Matchbox shaders, all in real time and in full floating point.

There are literally no limits to the amount of image control Live FX offers.

Simpler. Better. Faster.

By not relying on nDisplay, Live FX provides a simpler workflow with Unreal Engine that is faster to set up. With Live FX, all you have to do is feed the output of the cine camera actor in Unreal into Live FX. There it goes through the projection engine to be correctly projected onto the LED volume. With that, you are also perfectly equipped for virtual set extensions, done quickly and easily.

Live Link into Unreal

By using the dedicated Live Link plugin (available on our support site), Live FX can forward time-stamped camera tracking information, as well as playback and record state along with Scene and Take information, to Unreal Engine. Inside Unreal, the incoming metadata can be linked to the cine camera actor and Take Recorder to run fully in sync with Live FX.

Connect any way you want

There are a number of ways to receive the image output back from Unreal Engine. If Unreal and Live FX are running on the same system, the image transfer can be kept entirely on the GPU by using the Unreal TextureShare SDK or Spout. With this, there is close to no latency at all between Unreal and Live FX. If Unreal Engine resides on a separate workstation, the image transfer can be done via SDI or NDI.

Set Extensions

Since Live FX receives the output of the cine camera actor in Unreal, it is straightforward to build virtual set extensions. The camera output can be captured back into Live FX and overlaid with an automatically generated alpha of the entire stage. This allows Live FX to place the image feed straight from Unreal into all areas of the image that are not covered by an LED screen, all on the same system that is driving the LED volume. Creating virtual set extensions has never been easier!

Unity and Blender Workflows

Through its highly flexible Open Sound Control (OSC) interface, Live FX can easily be hooked up to any game engine or 3D software. Fetching the image output back works the same way as with Unreal: via texture sharing directly on the GPU, or via SDI and NDI. This opens up a completely new way of working in virtual production!

Start easy - advance fast

Shooting green screen starts with being able to key the green and replace it with a background of your choice. Live FX ships with HSV, RGB, Vector, Chroma and Luma qualifiers, which can be combined to generate the ultimate alpha channel. If that is all you need, great! You can output the full composite and the generated alpha channel separately via SDI, NDI or directly from the GPU via HDMI or DisplayPort to, e.g., Unreal Engine! If you plan on bigger things, read on...
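To illustrate how qualifiers combine into one alpha channel, here is a minimal sketch with two generic qualifiers: a soft hue range on HSV values and a hard luma range, intersected with `min` and inverted so the green screen becomes transparent. The parameter defaults are hypothetical starting values, and this is not Live FX's keyer algorithm.

```python
import colorsys
from typing import Tuple

RGB = Tuple[float, float, float]


def hue_qualifier(rgb: RGB, center_deg: float = 120.0, width_deg: float = 40.0,
                  soft_deg: float = 20.0, min_sat: float = 0.3) -> float:
    """1.0 inside the hue range, fading linearly to 0.0 over soft_deg; 0.0 if too desaturated."""
    h, s, _ = colorsys.rgb_to_hsv(*rgb)
    if s < min_sat:
        return 0.0
    # Circular distance between the pixel hue and the target hue, in degrees (0..180).
    dist = abs((h * 360.0 - center_deg + 180.0) % 360.0 - 180.0)
    half = width_deg / 2.0
    if dist <= half:
        return 1.0
    if dist >= half + soft_deg:
        return 0.0
    return 1.0 - (dist - half) / soft_deg


def luma_qualifier(rgb: RGB, low: float = 0.1, high: float = 0.9) -> float:
    """1.0 if the pixel's luma (Rec. 709 weights) lies within [low, high], else 0.0."""
    r, g, b = rgb
    luma = 0.2126 * r + 0.7152 * g + 0.0722 * b
    return 1.0 if low <= luma <= high else 0.0


def foreground_alpha(rgb: RGB) -> float:
    """Intersect the qualifiers (min) to get the screen matte, then invert it."""
    screen = min(hue_qualifier(rgb), luma_qualifier(rgb))
    return 1.0 - screen
```

Taking the minimum keeps only pixels that pass every qualifier; a maximum would instead add the selections together, which is the other common way to combine mattes.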

Green screen replacement & Grading

The next logical step is to replace the keyed green screen with another image. Live FX can load virtually any format, including all popular camera RAW formats and even 360° content or complete 3D Notch Blocks, to fill the background. The background can then be scaled and positioned as needed. The camera live feed and the digital background can be color graded separately as well as together to create the perfect illusion. Live FX even allows the use of OFX plugins and Matchbox GLSL shaders directly inside the composite and applies them in real time. What's more, per-frame metadata from the camera SDI signal, such as focus distance, can be linked directly to any parameter in Live FX, e.g. to blur a texture based on the camera lens' focus position.
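One physically motivated way to drive such a blur from the camera's focus distance is the thin-lens circle-of-confusion formula, c = |S2 − S1| / S2 · f² / (N (S1 − f)), with focus distance S1, object distance S2, focal length f and f-number N. The sketch assumes the background texture can be treated as lying at a single virtual distance and that focal length, f-number and sensor width are known; all names are hypothetical, and since Live FX lets you link the incoming value to any parameter, the mapping itself is your choice.

```python
def circle_of_confusion_mm(focus_mm: float, subject_mm: float,
                           focal_mm: float, f_number: float) -> float:
    """Thin-lens blur-circle diameter on the sensor for an object at subject_mm.

    c = |S2 - S1| / S2 * f^2 / (N * (S1 - f)), with S1 = focus distance, S2 = object distance.
    """
    if focus_mm <= focal_mm or subject_mm <= 0.0:
        raise ValueError("need focus distance > focal length and a positive object distance")
    return abs(subject_mm - focus_mm) / subject_mm * focal_mm ** 2 / (f_number * (focus_mm - focal_mm))


def blur_radius_px(coc_mm: float, sensor_width_mm: float, image_width_px: int) -> float:
    """Convert the blur-circle diameter on the sensor into a blur radius in image pixels."""
    return 0.5 * coc_mm / sensor_width_mm * image_width_px


# Focus pulled to 2 m on a 50 mm lens at f/2; the virtual backdrop sits at 10 m.
coc = circle_of_confusion_mm(2000.0, 10000.0, 50.0, 2.0)
radius = blur_radius_px(coc, sensor_width_mm=36.0, image_width_px=3840)
```

As focus is pulled towards the backdrop distance, the radius shrinks to zero, which is the behavior a focus-linked blur should mimic.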

Object- and camera tracking

Time to take it up a notch: Live FX features various tracking techniques to offset the digital background based on camera movement. In many scenarios, the live object tracker is sufficient to create a parallax effect on a background image linked to the tracker. But of course Live FX also features a virtual camera, which can be linked directly to the physical camera on set. Whether using the camera's own gyro SDI metadata or a dedicated tracking device like Mo-Sys StarTracker, NCAM, StereoLabs, Intel RealSense or HTC Vive trackers, Live FX offers the most flexibility to capture camera tracking information and create a virtual 3D scene using 2D and 3D elements.

Record everything - incl. metadata

On virtual production shoots, metadata is a precious commodity. That's why Live FX records any and all metadata from the tracking device, camera SDI or even user input such as Scene & Take, annotations and more into sidecar files that are ready to use for VFX post. Users can record the live camera feed untouched, or the complete composite, to ProRes, DNx MXF or H264. If the recording format supports an alpha channel (such as ProRes 4444), the generated alpha channel is recorded as well!
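Frame-accurate metadata is typically keyed by timecode so post can line it up with the recorded media. A minimal sketch of that idea writes one CSV row per frame with non-drop-frame SMPTE timecode; the column names and the CSV layout are hypothetical and do not describe Live FX's actual sidecar format.

```python
import csv
import io
from typing import Dict, Iterable, List


def frames_to_timecode(frame: int, fps: int) -> str:
    """Non-drop-frame SMPTE timecode (HH:MM:SS:FF) for an integer frame rate."""
    ff = frame % fps
    total_seconds = frame // fps
    ss = total_seconds % 60
    mm = (total_seconds // 60) % 60
    hh = (total_seconds // 3600) % 24  # timecode wraps after 24 hours
    return f"{hh:02d}:{mm:02d}:{ss:02d}:{ff:02d}"


def write_sidecar(rows: Iterable[Dict[str, float]], fps: int, start_frame: int = 0) -> str:
    """Serialize per-frame metadata to CSV, one row per frame, keyed by timecode."""
    materialized: List[Dict[str, float]] = list(rows)
    fields = sorted({key for row in materialized for key in row})
    buf = io.StringIO()
    writer = csv.DictWriter(buf, fieldnames=["timecode"] + fields, lineterminator="\n")
    writer.writeheader()
    for i, row in enumerate(materialized):
        writer.writerow({"timecode": frames_to_timecode(start_frame + i, fps), **row})
    return buf.getvalue()


# One frame of hypothetical tracking and lens data, recorded at frame 100 of a 25 fps take.
sidecar = write_sidecar([{"pan": 1.5, "focus_mm": 2000.0}], fps=25, start_frame=100)
```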

Prep for VFX & Post Production

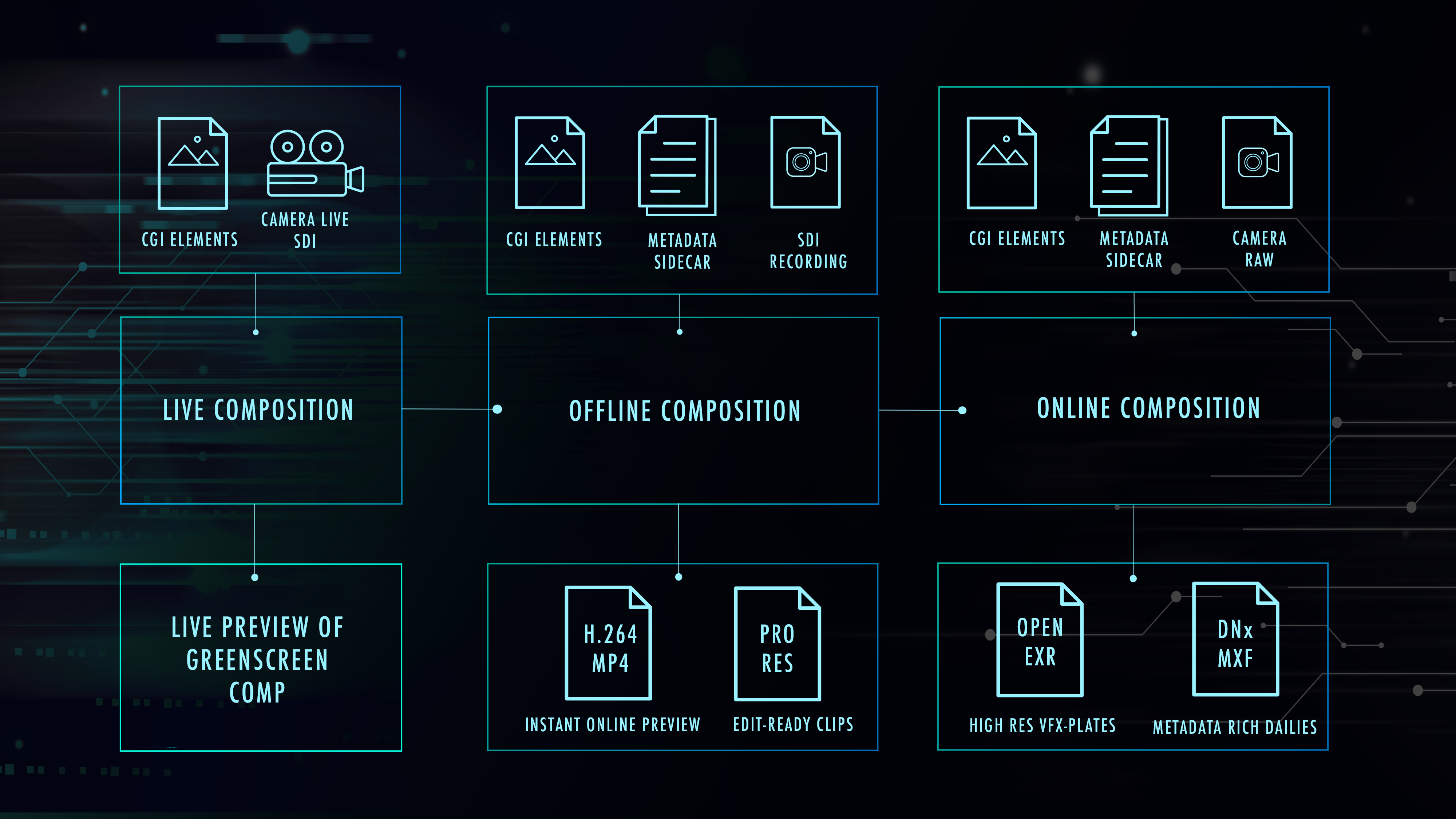

Live FX allows for a unique and highly efficient workflow towards post: Start with the live composite and record the raw feeds and metadata separately. As soon as the recording stops, Live FX automatically creates an offline composite that is ready for instant playback and review. The offline composite is a duplicate of the live composite, using the just-recorded files instead of the live signals. It also includes all other elements that were used in the live composite and provides the recorded animation channels for further manipulation. Once the high-resolution media is offloaded from the camera, Live FX automatically assembles the online composite and replaces the on-set recorded clips with the high-quality camera raw media. These online composites can be loaded into Assimilate’s SCRATCH® to create metadata-rich dailies or VFX plates in the OpenEXR format, including all the recorded frame-based metadata.

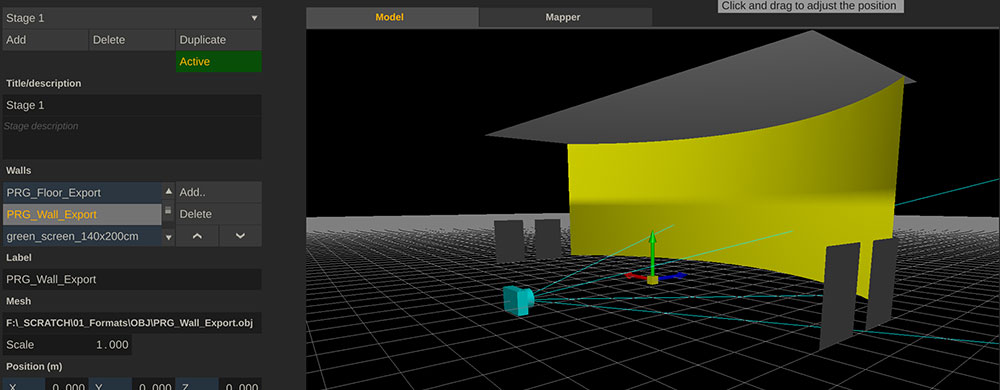

Complete control over stage lighting

Dynamic lighting based on image content is a must-have in virtual production to create the perfect illusion.

Live FX can drive not only the LED volume but the stage lighting as well.

For this, Live FX supports DMX-enabled fixtures as well as video fixtures, and it can be controlled via a convenient web interface or hooked up to a lighting console!

Support for DMX Fixtures

Live FX can control any DMX-enabled fixture with any channel layout.

Pre-configured channel layouts can be imported via GDTF template or directly downloaded from the Open Fixture Library.

Supported outputs are sACN, Art-Net and direct USB-to-DMX interfaces.

For a more granular effect, fixtures with multiple pixels can be segmented, colorgraded and color managed for ultimate lighting control.
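To show how sampled colors end up on the wire, the sketch below expands per-segment colors into a simple channel layout, patches them into a 512-channel universe and wraps the universe in an ArtDMX packet as defined by the Art-Net 4 specification (sent via UDP to port 6454). The single-letter layouts and the naive white extraction are simplifying assumptions; real layouts from GDTF or the Open Fixture Library also include dimmer, strobe and other channels. All function names are hypothetical.

```python
import struct
from typing import List, Sequence, Tuple

RGB8 = Tuple[int, int, int]


def fixture_channels(segments: Sequence[RGB8], layout: str = "RGB") -> List[int]:
    """Expand per-segment 8-bit colors into DMX channel values, e.g. "RGBW" per segment."""
    out: List[int] = []
    for r, g, b in segments:
        w = 0
        if "W" in layout:
            w = min(r, g, b)  # naive white extraction (assumption): move the common part to W
            r, g, b = r - w, g - w, b - w
        values = {"R": r, "G": g, "B": b, "W": w}
        out.extend(values[ch] for ch in layout)
    return out


def place(universe: bytearray, start_address: int, channels: Sequence[int]) -> None:
    """Patch channel values into a 512-slot universe at a 1-based DMX start address."""
    end = start_address - 1 + len(channels)
    if start_address < 1 or end > 512:
        raise ValueError("fixture does not fit into the universe")
    universe[start_address - 1:end] = bytes(channels)


def artdmx_packet(universe_addr: int, data: bytes, sequence: int = 0) -> bytes:
    """Build an Art-Net ArtDMX packet (OpCode 0x5000, protocol version 14)."""
    if len(data) % 2:
        data += b"\x00"  # the DMX payload length must be even
    if not 2 <= len(data) <= 512:
        raise ValueError("ArtDMX carries 2 to 512 channels")
    header = b"Art-Net\x00"
    header += struct.pack("<H", 0x5000)                                   # OpCode, little-endian
    header += bytes([0, 14, sequence & 0xFF, 0])                          # ProtVerHi/Lo, Sequence, Physical
    header += bytes([universe_addr & 0xFF, (universe_addr >> 8) & 0x7F])  # SubUni, Net
    header += struct.pack(">H", len(data))                                # Length, big-endian
    return header + data


# Two RGBW segments of a hypothetical fixture patched at DMX address 1 of universe 0.
universe = bytearray(512)
place(universe, 1, fixture_channels([(255, 128, 0), (10, 20, 30)], "RGBW"))
packet = artdmx_packet(universe_addr=0, data=bytes(universe))
```

Sending is then a single `sendto` on a UDP socket to the node's IP address and port 6454.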

Support for Video Fixtures

Video fixtures like the Kino Flo Mimik can be controlled in the same way as DMX-based fixtures.

Live FX generates a so-called Fixture Mosaic, which is sent to the LED processor controlling the video fixtures.

Of course, any fixture can also be color graded, color managed and sampled from anywhere in the picture.

Hook up any Lighting Console

Live FX can be hooked up to any lighting console via sACN.

The lighting console controls virtual fixtures inside Live FX, which in turn are linked to physical fixtures on stage.

This way, the gaffer has ultimate control over the stage lighting and can even add light cards on the fly.

Assimilate provides a GDTF template to make patching Live FX fixtures as fast and straightforward as possible.
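When patching a console to Live FX over sACN, it helps to know where the data travels on the network: ANSI E1.31 assigns each universe its own multicast group, 239.255.&lt;universe high byte&gt;.&lt;universe low byte&gt;, on UDP port 5568. A small helper, with a hypothetical name, for working out those addresses:

```python
SACN_PORT = 5568  # UDP port defined by ANSI E1.31


def sacn_multicast_group(universe: int) -> str:
    """Multicast address for an sACN universe: 239.255.<high byte>.<low byte>."""
    if not 1 <= universe <= 63999:
        raise ValueError("sACN universes range from 1 to 63999")
    return f"239.255.{universe >> 8}.{universe & 0xFF}"


# E.g. a console outputting universes 1 to 3 towards Live FX.
groups = [sacn_multicast_group(u) for u in range(1, 4)]
```

Network switches with IGMP snooping use these groups to forward each universe only to the devices that subscribed to it.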

Web Interface

For smaller setups, Live FX ships with a built-in web server, providing an easy-to-use web interface to quickly adjust important aspects and controls of any fixture or fixture group.

It's set up in seconds and lets you change the stage lighting from anywhere on the stage via smartphone or tablet.

Pixel Mapping and Fixture Grading

Pixel mapping has never been easier! In Live FX, every fixture can either be set to a defined color or set to sample image content. That can be a clip played from disk, or a live feed (from another media server or Live FX system, for instance).

The sample region is easily defined by dragging a box right in the viewport.

On top of that, every fixture can be color graded using a fast and easy-to-use grading toolset.

Live FX can color manage fixtures: no matter which color space and transfer function the underlying imagery is encoded with, it can be transformed to the color space and transfer function of the fixture.
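As a simplified illustration of such a transform, the sketch below decodes sRGB-encoded values to linear light (IEC 61966-2-1), applies a gain as a stand-in for a fixture grade, and re-encodes for a fixture assumed to expect either linear input (gamma 1.0) or a pure power law, before quantizing to the fixture's bit depth. Gamut conversion between color spaces is left out, and the fixture response model is an assumption, not a property of any particular fixture.

```python
from typing import Sequence, Tuple


def srgb_to_linear(c: float) -> float:
    """Inverse sRGB transfer function (IEC 61966-2-1), input and output in [0, 1]."""
    return c / 12.92 if c <= 0.04045 else ((c + 0.055) / 1.055) ** 2.4


def linear_to_fixture(c: float, fixture_gamma: float) -> float:
    """Encode linear light for a fixture assumed to expect a pure power-law input."""
    return max(0.0, min(1.0, c)) ** (1.0 / fixture_gamma)


def convert_for_fixture(rgb: Sequence[float], fixture_gamma: float = 1.0,
                        gain: float = 1.0, bits: int = 8) -> Tuple[int, ...]:
    """sRGB-encoded pixel -> fixture code values, with a simple gain grade in linear light."""
    max_code = (1 << bits) - 1
    return tuple(round(linear_to_fixture(srgb_to_linear(c) * gain, fixture_gamma) * max_code)
                 for c in rgb)


# Mid-gray in sRGB becomes roughly 21% linear light for a linear-input, 8-bit fixture.
levels = convert_for_fixture((1.0, 0.0, 0.5))
```

Doing the grade in linear light keeps a gain change proportional to the light the fixture actually emits.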

| Feature | Live FX | Live FX Studio |
|---|---|---|
| Green Screen Tools | ✓ | ✓ |
| Image-based Lighting | ✓ | ✓ |
| Lighting Console DMX Support | ✓ | ✓ |
| Sync Players | ✓ | ✓ |
| Multi-cam Support | ✓ | ✓ |
| Syncing of multiple Live Feeds | ✓ | ✓ |
| Software Object Tracker | ✓ | ✓ |
| RTMP Streaming | ✓ | ✓ |
| NDI Capture & Output | ✓ | ✓ |
| Open Sound Control (OSC) | ✓ | ✓ |
| Source Recording & Offline Comp | ✓ | ✓ |
| Live Link to Unreal Engine | ✓ | ✓ |
| Remote Control | ✓ | ✓ |
| OFX Plugin Support | ✓ | ✓ |
| Matchbox Shader Support | ✓ | ✓ |
| Notch Block Support | ✓ | ✓ |
| USD Format Support | ✓ | ✓ |
| Basic Camera Tracking | ✓ | ✓ |
| FreeD tracking protocol support | ✓ | ✓ |
| HTC Mars Camera tracker | ✓ | ✓ |
| Mo-Sys Camera tracker | · | ✓ |
| Ncam Camera tracker | · | ✓ |
| Stype Camera tracker | · | ✓ |
| OptiTrack Camera tracker | · | ✓ |
| Vicon Camera tracker | · | ✓ |
| Antilatency Camera tracker | · | ✓ |
| OpenVPCal LED Wall Calibration | · | ✓ |
| Editing & Rendering Toolset | · | ✓ |
| Projection Mapping | · | ✓ |
| Remote Grading | · | ✓ |
| Video Router Control | · | ✓ |
| LED wall control | · | ✓ |
| Offline/Online Comp Conform | · | ✓ |

Need help?

• Check our user guide for Live FX

• Install recommended Video-IO Drivers for AJA, Blackmagic and Bluefish444

• Watch our Live FX Hands-on Video Tutorials

• Join our Facebook Community

• Get in touch with our Support Team

Download

Live FX can be downloaded for Windows and macOS.

Visit our support site for Recommended Drivers, Archived Versions and Release Notes, and the Live Link plugin for Unreal Engine.

Learn to use Live FX to the Fullest

Learn more about Live FX by watching one of the many tutorials available.

Browse and search the user manual on our support site, or contact support at support@assimilateinc.com.

Pick My Plan


Live FX/Live FX Studio is available as:

- a monthly automatic recurring subscription - $345/$695 USD

- a 3-month project license - $895/$1595 USD

- a 1 year rental - $1495/$4995 USD

- a permanent license (incl. 1 year of support & updates) - $2495/$7495 USD

- a site license (contact sales for more info)

Each license comes with full access to the latest version and all updates of the software, as well as access to our technical support team.

Note that a permanent license comes with 1 year of support. After that, you can continue to use the software, but to remain eligible for further software updates and access to our technical support team, you need to extend your support contract.

A site license offers you an unlimited number of licenses to be used within your facility.

Please contact sales for more info.

What you need to run Live FX

OS: Windows 10, macOS 10.15 (Catalina) and up.

CPU: Any modern Apple, Intel or AMD processor.

GFX: Any modern graphics card. High-end graphics preferred: Apple Silicon / NVIDIA Quadro / AMD Radeon PRO. Note that on systems with standard Intel graphics, some features may not be supported.

RAM: Min. 8 GB, 12 GB or more preferred.

SDI (optional): AJA, Blackmagic, Bluefish444.

See detailed system requirements here.